This keeps cropping up time and time again.

Unfortunately, every now and again a new term or buzzword comes along that gets treated as a holy grail. Two that come to mind right now are log and raw. Neither log nor raw is a magic bullet that guarantees the best performance. Used incorrectly or inappropriately, both can give inferior results. In addition, there are many flavours of log and raw, each with a very different performance range.

A particular case in point is the 12 bit raw available from several of Sony’s mid range large sensor cameras: the FS700, FS7 and FS5.

Raw can be either log or linear. This particular flavour of raw is encoded using linear data. If it is linear then each successively brighter stop of exposure should be recorded with twice as many code values or shades as the previous stop. This accurately replicates the change in the light in the scene you are shooting. If you make the scene twice as bright, you need to record it with twice as much data. Every time you go up a stop in exposure you are doubling the light in the scene. 12 bit linear raw is actually very rare, especially from a camera with a high dynamic range. To my knowledge, Sony are the only company that offer 14 stops of dynamic range using 12 bit linear data.

There’s actually a very good reason for this: strictly speaking, it’s impossible! Here’s why: for each stop we go up in exposure we need twice as many code values. With 12 bit data there are a maximum of 4096 code values, which is not enough to record 14 stops.

If stop 1 uses 1 code value, stop 2 will use 2, stop 3 will use 4, stop 4 will use 8 and so on.

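| Stop | Code values in this stop | Total code values required |
|---|---|---|
| +1 | 1 | 1 |
| +2 | 2 | 3 |
| +3 | 4 | 7 |
| +4 | 8 | 15 |
| +5 | 16 | 31 |
| +6 | 32 | 63 |
| +7 | 64 | 127 |
| +8 (middle grey) | 128 | 255 |
| +9 | 256 | 511 |
| +10 | 512 | 1,023 |
| +11 | 1,024 | 2,047 |
| +12 | 2,048 | 4,095 |
| +13 | 4,096 | 8,191 |
| +14 | 8,192 | 16,383 |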

As you can see from the above if we only have 12 bit data and as a result 4096 code values to play with, we can only record an absolute maximum of 12 stops of dynamic range using linear data. To get even 12 stops we must record the first couple of stops with an extremely small amount of tonal information. This is why most 14 stop raw cameras use 16 bit data for linear or use log encoded raw data for 12 bit, where each stop above middle grey (around stop +8) is recorded with the same amount of data.
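The doubling arithmetic is easy to check for yourself. Here is a minimal Python sketch, assuming the same idealised model as above (the darkest stop gets 1 code value and every stop after it gets twice as many). The function names `linear_stop_table` and `max_linear_stops` are just my own labels for illustration. Note that the exact running total after n stops is 2^n - 1, one short of the round power of two.

```python
def linear_stop_table(stops):
    """Return (stop, values_in_stop, running_total) rows for ideal linear encoding."""
    rows = []
    total = 0
    for stop in range(1, stops + 1):
        values = 2 ** (stop - 1)  # each stop needs twice the values of the one below
        total += values
        rows.append((stop, values, total))
    return rows


def max_linear_stops(bit_depth):
    """Largest number of stops whose running total fits in 2**bit_depth code values."""
    available = 2 ** bit_depth
    stops = 0
    total = 0
    # Keep adding the next (doubled) stop while it still fits.
    while total + 2 ** stops <= available:
        total += 2 ** stops
        stops += 1
    return stops


if __name__ == "__main__":
    for stop, values, total in linear_stop_table(14):
        print(f"+{stop:<3}{values:>8,}{total:>9,}")
    print("12 bit linear fits", max_linear_stops(12), "stops")
    print("16 bit linear fits", max_linear_stops(16), "stops")
```

Under this model 12 bit tops out at 12 stops and 16 bit at 16 stops, which is why 14 stops of straight linear data will not fit in 12 bits.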

So how are Sony doing it on the FS5, FS7 etc? I suspect (I’m pretty damn certain in fact) that Sony use something called floating point math. In essence what they do is take the linear data coming off the sensor and round the values recorded to the nearest 4 or 8. So, rounding to the nearest 4, stop +14 is now only recorded with 2,048 values, stop +13 with 1,024 values etc. This is fine for the brighter stops where there are hundreds or even thousands of values, as it has no significant impact on the brighter parts of the final image. But in the darker parts of the image it does have an impact. For example, stop +5, which starts off with 16 values, ends up only being recorded with 4 values, and each stop below this only has 1 or 2 discrete levels. This results in blocky and often noisy looking shadow areas, a common complaint with 12 bit linear raw. I don’t know for a fact that this is what they are doing. But if you look at what they need to do, the options available and the end results for 12 bit raw, this certainly appears to be the case.
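To show what that rounding does to each stop, here is a rough Python sketch. It is only a model of the idea speculated about above, not a description of what Sony’s cameras actually do. It assumes a 14 stop linear signal (values 1 to 16,383) and uses integer division by a hypothetical `step` of 4 as a stand-in for rounding to the nearest 4. The name `levels_per_stop` is made up for this example.

```python
def levels_per_stop(stops=14, step=4):
    """Count the distinct output codes left in each stop after dividing by step.

    Assumption: stop n covers linear values 2**(n-1) .. 2**n - 1, and integer
    division by `step` stands in for the rounding described in the text.
    """
    result = {}
    for stop in range(1, stops + 1):
        lo, hi = 2 ** (stop - 1), 2 ** stop - 1
        codes = {value // step for value in range(lo, hi + 1)}
        result[stop] = len(codes)
    return result


if __name__ == "__main__":
    for stop, levels in levels_per_stop().items():
        print(f"stop +{stop:<3}{levels:>6,} levels")
    # The largest output code must fit in 12 bits (0..4095).
    print("largest code:", (2 ** 14 - 1) // 4)
```

With a step of 4 the largest output code is 4,095, so everything fits in 12 bits, but stop +5 is left with 4 levels, stop +4 with 2, and the stops below that with 1 each (the two darkest stops even share the same code).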

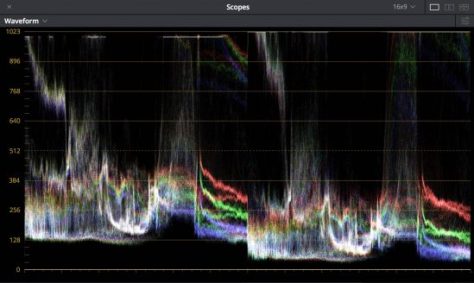

Meanwhile, a camera like the FS7, which can record 10 bit log, will retain the full data range in the shadows, because with log encoding the brighter stops are each recorded with the same amount of data. With S-Log2 and 10 bit XAVC-I the FS7 uses approx 650 code values to record the 6 brightest stops in its capture range, reserving approx 250 code values for the 8 darkest stops. Compare this to the linear example above and you will see that 10 bit S-Log2 has as much data below middle grey as you would expect to find in a straight 16 bit linear recording (S-Log3 actually reserves slightly more data for the shadows). BUT that’s for 16 bit. Sony’s 12 bit raw is squeezing 14 stops into what should be an impossibly small number of code values, so in practice what I have found is that 10 bit S-Log has noticeably more data in the shadows than 12 bit raw.

In the highlights 12 bit linear raw will have more data than 10 bit S-Log2 and S-Log3, and this is borne out in practice, where a brightly exposed raw image will give amazing results with beautiful highlights and mid range. But if your 12 bit raw is dark or underexposed it is not going to perform as well as you might expect. For dark and low key scenes 10 bit S-Log is most likely going to give a noticeably better image. (Note: 8 bit S-Log2/3, as you would have from an FS5 in UHD, only has a quarter of the data that 10 bit has. The FS5 records the first 8 stops in 8 bit S-Log2 with approx 64 code values; S-Log3 is only marginally better at approx 80 code values. 12 bit linear outperforms 8 bit log across the entire range.)
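As a rough sanity check on the shadow comparison, here is a small Python sketch. The S-Log2 numbers are simply the approximate figures quoted above. The linear numbers come from the idealised doubling model (the darkest stop gets 1 code value), and the 12 bit case assumes the divide-by-4 rounding I speculated about earlier, which is an assumption and not a confirmed Sony method. The names `SLOG2_10BIT` and `linear_codes_for_darkest` are invented for this example.

```python
# Approximate 10 bit S-Log2 figures quoted in the text above.
SLOG2_10BIT = {"brightest_6_stops": 650, "darkest_8_stops": 250}


def linear_codes_for_darkest(stops_dark=8, step=1):
    """Distinct codes covering the darkest stops of an ideal linear signal.

    step=1 models straight linear data; step=4 models the assumed rounding
    used to squeeze 14 stops into 12 bits.
    """
    top = 2 ** stops_dark - 1  # highest linear value inside the darkest stops
    return len({value // step for value in range(1, top + 1)})


if __name__ == "__main__":
    print("10 bit S-Log2, darkest 8 stops:", SLOG2_10BIT["darkest_8_stops"])
    print("straight linear, darkest 8 stops:", linear_codes_for_darkest(8, 1))
    print("12 bit model (step 4), darkest 8 stops:", linear_codes_for_darkest(8, 4))
```

On these assumptions the straight linear figure (255) lands right next to the approx 250 of 10 bit S-Log2, while the 12 bit model is left with only about a quarter of that for the same 8 stops.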

Sony’s F5 and F55 cameras record to the R5 and R7 recorders using 16 bit linear data. 16 bit data is enough for 14 stops. But I believe that Sony still use floating point math for 16 bit recording. This time, instead of using the floating point math to make room for an otherwise impossible dynamic range, they use it to take a little bit of data from the brightest stop to give extra code values in the shadows. When you have 65,536 code values to play with you can afford to do that. This then adds a lot of extra tonal values and shades to the shadows compared to 10 bit log, and as a result 16 bit linear raw will outperform 10 bit log across the entire exposure range by a fairly large margin.

So there you have it. I know it’s hugely confusing sometimes. Not all types of raw are created equal. It’s really important to understand this stuff if you’re buying a camera. Just because a camera has raw, it doesn’t necessarily mean an automatic improvement in image quality in every shooting situation. Log can be just as good or possibly even better in some situations, raw better in others. There are reasons why cameras like the F5/R5 cost more than an FS5/Shogun/Odyssey.